Workshop 2 - Data importation and exploratory data analysis

23 April 2011

Basic statistics references

- Logan (2010) - Chpt 1, 2 & 6

- Quinn & Keough (2002) - Chpt 1, 2, 3 & 4

Basic syntax

| Name | Entry | Syntax | Class |

|---|---|---|---|

| A | 100 | hint | |

| B | Big | hint | |

| VAR1 | 100 & 105 & 110 | hint | |

| VAR2 | 5 + 6 | hint | |

| VAR3 | 150 to 250 | hint |

- Print out the contents of the vector you called 'a'. Notice that the output appears on the line under the syntax that you entered, and that the output is proceeded by a [1]. This indicates that the value returned (100) is the first entry in the vector

- Print out the contents of the vector called 'b'. Again notice that the output is proceeded by [1].

- Print out the contents of the vector called 'var1'.

- Print out the contents of the vector called 'var2'. Notice that the output contains the product of the statement rather than the statement itself.

- Print out the contents of the vector called 'var3'. Notice that the output contains 100 entries (150 to 250) and that it spans multiple lines on the screen. Each new line begins with a number in square brackets [] to indicate the index of the entry that immediately follows.

Variables - vectors

- The numbers 1, 4, 7 & 9 (call the object y)

- The numbers 10 to 25 (call the object y1)

- The sequency of numbers 2, 4, 6, 8...20 (call the object y2)

- The sequency of numbers 2, 4, 6, 8...20 (call the object y2)

- A factor that lists the sex of individuals as 6 females followed by 6 males

- A factor called TEMPERATURE that lists 10 cases of 'High', 10 'Medium & 10 'Low'

- A factor called TEMPERATURE that lists 'High', 'Medium & 'Low' alternating 10 times

- A factor called TEMPERATURE that lists 10 cases of 'High', 8 cases of 'Medium' and 11 cases of 'Low'

Data sets - Data frames(R)

Rarely is only a single biological variable collected. Data are usually collected in sets of variables reflecting tests of relationships, differences between groups, multiple characterizations etc. Consequently, data sets are best organized into collections of variables (vectors). Such collections are called data frames in R.

Data frames are generated by combining multiple vectors together whereby each vector becomes a separate column in the data frame. In for a data frame to represent the data properly, the sequence in which observations appear in the vectors (variables) must be the same for each vector and each vector should have the same number of observations. For example, the first observations from each of the vectors to be included in the data frame must represent observations collected from the same sampling unit.

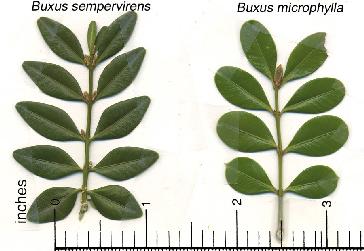

To demonstrate the use of dataframes in R, we will use fictitious data representing the areas of leaves of two species of Japanese Boxwood

| Format of the fictitious data set | ||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|||||||||||||||||||||||||||

- First create the categorical (factor) variable containing the listing of B.semp three times and B.micro three times

- Now create the dependent variable (numeric vector) containing the leaf areas

- Combine the two variables (vectors) into a single data set (data frame) called LEAVES

- Print (to the screen) the contents of this new data set called LEAVES

- You will have noticed that the names of the rows are listed as 1 to 6 (this is the default). In the table above, we can see that there is a variable called PLANT that listed unique plant identification labels. These labels are of no use for any statistics, however, they are useful for identifying particular observations. Consequently it would be good to incorporate these labels as row names in the data set. Create a variable called PLANT that contains a listing of the plant identifications

- Use this plant identification label variable to define the row names in the data frame called LEAVES

- In the textbox provided below, list each of the lines of R syntax required to generate the data set

The above syntax forms a list of instructions that R can perform. Such lists are called scripts. Scripts offer the following;

- Enable a sequence of tasks such as data entry, analysis and graphical preparation to be repeated quickly and precisely

- Ensure that the sequence of tasks used to complete an analysis are permanently documented

- Simplify performing many similar analyses

- Simplify sharing of data, analyses and techniques

- close down R

- restart R

- Change the working directory (path) to the location where you saved the script file in Q2-2 above

- Source the script file

- Print (list on screen) the contents of the AREA vector. Note, that this is listing the contents of the AREA vector, this is not the same as asking it to list the contents of the AREA vector within the LEAVES data frame. For example, multiply all of the numbers in the AREA vector by 2. Now print the contents of the AREA vector then the LEAVES data frame. Notice that only the values in the AREA vector have changed - the values within the AREA vector of the LEAVES data frame were not effected.

- To avoid confusion and clutter, it is therefore always best to remove single vectors once a data frame has been created. Remove the PLANTS, SPECIES and AREA vectors.

- Notice what happens when you now try to access the AREA vector.

- To access a variable from within a data frame, we use the $ sign. Print the contents of the LEAVES AREA vector

| Access | Syntax |

|---|---|

| print the LEAVES data set | hint |

| print first leaf area in the LEAVES data set | hint |

| print the first 3 leaf areas in the LEAVES data set | hint |

| print a list of leaf areas that are greater than 20 | hint |

| print a list of leaf areas for the B.microphylum species | hint |

| print the section of the data set that contains the B.microphylum species | hint |

| alter the second leaf area from 22 to 23 | hint |

Importing data and data files

Although it is possible to generate a data set from scratch using the procedures demonstrated in the above demonstration module, often data sets are better managed with spreadsheet software. R is not designed to be a spreadsheet, and thus, it is necessary to import data into R. We will use the following small data set (in which the feeding metabolic rate of stick insects fed two different diets was recorded)to demonstrate how a data set is imported into R.

| Format of the fictitious data set | ||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|||||||||||||||||||||||||||

- Enter the above data set into Excel and save the sheet as a comma delimited text file (CSV) . Ensure that the column titles (variable names) are in the first row and that you take note where the file is saved. To see the format of this file, open it in Notepad (the windows accessory program). Notice that it is just a straight text file, there is no encryption or encoding.

- Ensure that the current working directory is set to the location of this file

- Read (import) the data set into a data table . Since data exploration and analysis cannot begin until the data is imported into R, the syntax of this step would usually be on the first line in a new script file that is stored with the comma delimited text file version of the data set.

- To ensure that the data have been successfully imported, print the data frame

- Examine the contents of this comma delimited text file using Notepad

Population parameters

The little spotted kiwi (Apteryx owenii) is a very rare flightless bird that is extinct on mainland New Zealand and survives as 1000 individuals on Kapiti Island. In order to monitor the population, researches in the recovery team systematically captured all of the individuals in the population over a two week period. Each individual was weighed, banded, assessed and released. The file *.csv lists the weights of each individual male little spotted kiwi in the population.

Download Kiwi data set

| Format of kiwi.csv data file | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

||||||||||||||||||

Open the kiwi data file. HINT.

Generate a frequency histogram of male kiwi weights. HINT. This distribution represents the population (all possible observations) of male kiwi weights. Note that this is the statistical population and not a biological population - obviously a biological population entirely lacking in females would not last long!- Mean HINT

- SD HINT

Assuming, the population is normally distributed, it is possible to calculate the probability that a randomly recaptured male kiwi will weigh greater than a particular value, less than a particular value, or weigh between a range of weights. This probability is just the area under a particular region of a normal distribution and can be calculated using the normal probabilities.

Samples as estimates of populations

Here is a modified example from Quinn and Keough (2002). Lovett et al. (2000) studied the chemistry of forested watersheds in the Catskill Mountains in New York State. They had 38 sites and recorded the concentrations of ten chemical variables, averaged over three years. We will look at two of these variables, dissolved organic carbon (DOC) and hydrogen ions (H).

Download Lovett data set

| Format of lovett.csv data files | |||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

||||||||||||||||||||||||

The mean of a sample is considered to be a location characteristic of the sample. Along with the mean, it is often desirable to characterize the spread of data in a sample - that is to determine how variable the sample is.

| Summary Statistic | DOC | Modified DOC |

|---|---|---|

| Mean | HINT |

HINT |

| Median | HINT |

HINT |

| Variance | HINT |

HINT |

| Standard deviation | HINT |

HINT |

| Inter-quartile range | HINT |

HINT |

Exploratory data analysis

Sánchez-Piñero & Polis (2000) studied the effects of seabirds on tenebrionid beetles on islands in the Gulf of California. These beetles are the dominant consumers on these islands and it was envisaged that seabirds leaving guano and carrion would increase beetle productivity. They had a sample of 25 islands and recorded the beetle density, the type of bird colony (roosting, breeding, no birds), % cover of guano and % plant cover of annuals and perennials.

Download Sanchez data set

| Format of sanchez.csv data files | |||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

||||||||||||||||||||||||

Open the sanchez data file.

| Summary Statistic | No colonies | Roosting colony | Breeding colony |

|---|---|---|---|

| Mean HINT | |||

| Variance HINT | |||

| Standard deviation HINT |

- Which colony type has the greatest variance? (N, R or B)

Normality

Before proceeding, make sure you are familiar with the significance of normally distributed sample data and thus why it is necessary to examine the distribution of sample data as part of routine exploratory data analysis (EDA) prior to any formal data analysis.- Are there any outliers identified? (Y or N)

- Describe the shape of each distribution.

- Now transform the response variable to logs and redraw the boxplots HINT. Does this change (improve?) the shape of the distributions? (Y or N)

Linearity

Often it is necessary to examine the nature of the relationship or association between as part of routine exploratory data analysis (EDA) prior to any formal data analysis. The nature of relationships/associations between continuous data is explored using scatterplots.- Is there any evidence of non-linearity? (Y or N)

- Note, that the boxplots also enable us to explore the normality of both variables (populations). Is there any evidence of non-normality? (Y or N)

Sánchez-Piñero & Polis (2000) measured a number of continuous variables (% cover of guano, % cover or plants and abundance of beetles. Therefore, they might be interested in exploring the relationships between each of these variables. That is, the relationship between guano and plants, guano and beetles, and beetles and plants. While it is possible to create separate scatterplots for each pair (in this case three separate scatterplots), a scatterplot matrix is usually more informative and efficient.

Homogeneity of variance

Many statistical hypothesis tests assume that populations are equally varied. For hypothesis tests that compare populations (such as t-tests - see Question 4), it is important that one of the populations is not substantially more or less variable than the other population(s). Thus, such tests assume homogeneity of variance.- Firstly, is there any evidence of non-normality? (Y or N)

- Try square-root transforming (preferred over log transformation when applying to count data, since log(0) is not legal) the beetle variable (function is sqrt) and using this transformed variable to reconstruct the boxplots. Note that it may be necessary to perform a forth-root transformation (which performing the square-root transformation twice) in order to normalize this highly skewed data. This can be done using the expression to compute as sqrt(sqrt(BEETLE96)) HINT or HINT. If this successfully normalizes the data, focus on whether there is any evidence that the populations are equally varied. Was a forth-root transformation successfull? (Y or N)

- Try calculating the variance or standard deviation

of beetle abundance for each colony type separately (remember to use the transformed data, as the raw data was obviously non-normal and non-normality often results in unequal variances). Do these values provide any evidence for unequally varied populations? (Y or N)

Furness & Bryant (1996) studied the energy budgets of breeding northern fulmars (Fulmarus glacialis) in Shetland. As part of their study, they recorded the body mass and metabolic rate of eight male and six female fulmars.

Download Furness data set

| Format of furness.csv data files | |||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

||||||||||||||||||||||||

Open the furness data file.

- The biological hypotheses of interest

- The biological null hypotheses

- The statistical null hypotheses (H0)

The appropriate statistical test for testing the null hypothesis that the means of two independent populations are equal is a t-test

Before proceeding, make sure you understand what is meant by normality and equal variance as well as the principles of hypothesis testing using a t-test.- The metabolic rate of males is higher than that females

(one-tailed test)

HINT

- The metabolic rate of males is the same as that of females

(two-tailed test)

HINT

| Assumption | Diagnostic/Risk Minimization |

|---|---|

| I. | |

| II. | |

| III. |

So, we wish to investigate whether or not male and female fulmars have the same metabolic rates, and that we intend to use a t-test to test the null hypothesis that the population mean metabolic rate of males is equal to the population mean metabolic rate of females. Having identified the important assumptions of a t-test, use the samples to evaluate whether the assumptions are likely to be violated and thus whether a t-test is likely to be reliability.

- The assumption of normality has been violated?

- The assumption of homogeneity of variance has been violated?

- What is the t-value? (Excluding the sign. The sign will depend on whether you compared males to females or females to males, and thus only indicates which group had the higher mean).

- What is the df (degrees of freedom).

- What is the p value.

The mean metabolic rate of male fulmars was (choose correct option)

the mean metabolic rate of female fulmars.

Here is a modified example from Quinn and Keough (2002). Elgar et al. (1996) studied the effect of lighting on the web structure or an orb-spinning spider. They set up wooden frames with two different light regimes (controlled by black or white mosquito netting), light and dim. A total of 17 orb spiders were allowed to spin their webs in both a light frame and a dim frame, with six days `rest' between trials for each spider, and the vertical and horizontal diameter of each web was measured. Whether each spider was allocated to a light or dim frame first was randomized. The H0's were that each of the two variables (vertical diameter and horizontal diameter of the orb web) were the same in dim and light conditions. Elgar et al. (1996) correctly treated these as paired comparisons because the same spider spun her web in a light frame and a dark frame.

Download Elgar data set

| Format of elgar.csv data files | |||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

| ||||||||||||||||||||||||||||||

Open the elgar data file.

The mean vertical diameter of spider webs in dim conditions was (choose correct option)

the vertical dimensions in light conditions.

The mean horizontal diameter of spider webs in dim conditions was (choose correct option)

the horizontal dimensions in light conditions.

Non-parametric tests

We will now revisit the data set of Furness & Bryant (1996) that was used in Question 4 to investigate the effects of gender on the metabolic rates of breeding northern fulmars (Fulmarus glacialis). Furness & Bryant (1996) also recorded the body mass of the eight male and six female fulmars they captured.

Since the males and female fulmars were all independent of one another, a t-test would be appropriate to test the null hypothesis of no difference in mean body weight of male and female fulmars.

- What null hypothesis does this test actually evaluate?

- What are the underlying assumptions of a Wilcoxon-Mann-Whitney test?

- Statistical:

- Biological

(include trend):

Randomization (permutation) test

A wildlife ecologist responsible for the management of a significant population of southern brown bandicoot, Isoodon obesulus, was interested in determining the impacts that picnickers were having on the health of bandicoots in the park. In particular, he was interested in determining whether bandicoots that occupied areas frequented by picnickers were heavier (and thus fatter) than bandicoots that occurred in other woodland areas. Fifty adult male bandicoots were captured from picnic and woodland areas and the weights of all individuals were measured.

Download Bandicoot data set

| Format of bandicoots.csv data files | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

||||||||||||||||

Open the bandicoots data file.

When the assumptions of the t-test have been violated, the distribution of all possible t-values cannot reliably be assumed to follow a mathematical t distribution. So, when the null hypothesis is true, and there is no effect of AREA on the WEIGHT of bandicoots, what t-values would we expect.

- Define a new function that accepts a data set and returns an appropriate statistic. In this case, since we are comparing two populations a t-statistic is appropriate. Define an appropriate function .

- Next we need to define a function that alters the structure of the data. In this case, we need to define a function that randomly shuffles the categorical variable (group labels) around. Define an appropriate function .

- Then we use the 'boot()' function to repeatedly calculate the statistic, each time on the randomly altered data. In this case, we want to repeatidly (100 times) calculate the t-statistic from the data set in which the group labels have been randomly shuffled. Perform the bootstrapping .

- Examine the distribution of these t-values (the t-distribution). HINT

- Determine what proportion of resampled t-values are as great or greater than our actual sample t-value.

This then represents the probability of obtaining our sample t-value when the null hypothesis is true, and is thereby interpreted as any other p-value. What is the p-value?.

Power analysis

An ornithologist studying various populations of the Australian magpie, Gymnorhina tibicen, was primarily interested in whether the growth of urban magpies might be stunted as a result of the increased consumption of processed foods. To investigate this hypothesis she intended to measured the total body lengths in centimeters of a number of birds from both urban and rural locations. The null hypothesis of interest is that the population mean length of urban magpies is equal to that of rural magpies and thus a t-test is an appropriate test. Previous research had indicated that the mean body length of rural magpies was 36.87cm with a standard deviation of 2.

- What effect size

is she interested in detecting? HINT

- In order to have an 80% chance of detecting such an effect (if one really exists), how many replicate birds would the ornithologist need to measure from each population (assume significance level of 0.05)? HINT

- Relationship between power and sample size for a range of effect sizes (3, 4, 5 & 6). HINT. Note, you need to load the biology library

- Relationship between power and sample size for a range of standard deviations (1.8,2,2.2). HINT. Note, you need to load the biology library

- Relationship between minimum detectable effect size and sample size for a range of standard deviations (1.8,2,2.2). HINT

- Relationship between minimum detectable effect size and sample size for a range of power (0.7,0.8,0.9). HINT